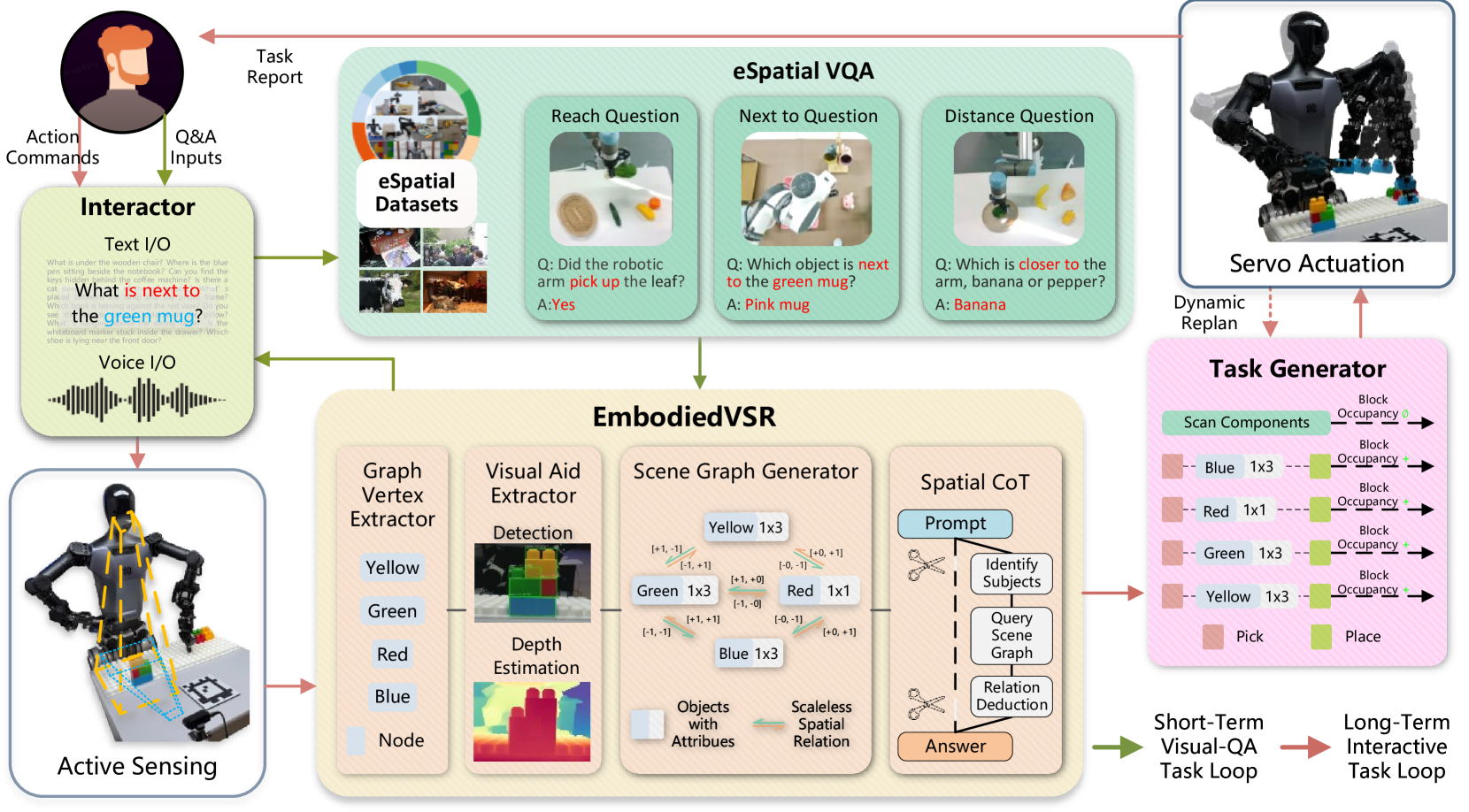

While multimodal large language models (MLLMs) have made groundbreaking progress in embodied intelligence, they still face significant challenges in spatial reasoning for complex long-horizon tasks. To address this gap, we propose EmbodiedVSR (Embodied Visual Spatial Reasoning), a novel framework that integrates dynamic scene graph-guided Chain-of-Thought (CoT) reasoning to enhance spatial understanding for embodied agents. By explicitly constructing structured knowledge representations through dynamic scene graphs, our method enables zero-shot spatial reasoning without task-specific fine-tuning. This approach not only disentangles intricate spatial relationships but also aligns reasoning steps with actionable environmental dynamics.
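As a rough illustration of the idea, the sketch below keeps a small scene graph of (subject, relation, object) triples, updates it when an object moves, and serializes it into a chain-of-thought prompt. It is a minimal sketch under stated assumptions, not the EmbodiedVSR implementation: the `SceneGraph` class, the `build_cot_prompt` helper, the relation name `on_top_of`, and the prompt wording are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SceneGraph:
    """Hypothetical scene graph: objects are nodes, triples are directed edges."""

    objects: set[str] = field(default_factory=set)
    relations: set[tuple[str, str, str]] = field(default_factory=set)

    def add_relation(self, subject: str, relation: str, obj: str) -> None:
        self.objects.update((subject, obj))
        self.relations.add((subject, relation, obj))

    def move(self, subject: str, relation: str, obj: str) -> None:
        """Dynamic update: drop the subject's outgoing relations, then add the new one."""
        self.relations = {r for r in self.relations if r[0] != subject}
        self.add_relation(subject, relation, obj)

    def to_prompt(self) -> str:
        """Serialize the graph as sorted text lines so the prompt is deterministic."""
        lines = [f"- {s} {rel} {o}" for s, rel, o in sorted(self.relations)]
        return "Scene graph:\n" + "\n".join(lines)


def build_cot_prompt(graph: SceneGraph, question: str) -> str:
    """Combine the serialized graph and a question into a step-by-step prompt (assumed wording)."""
    return (
        f"{graph.to_prompt()}\n\n"
        f"Question: {question}\n"
        "Reason step by step using only the relations above, then answer."
    )


graph = SceneGraph()
graph.add_relation("red_block", "on_top_of", "blue_block")
graph.add_relation("blue_block", "on_top_of", "table")
graph.move("red_block", "on_top_of", "table")  # the agent moved the red block
prompt = build_cot_prompt(graph, "Is anything on top of the blue block?")
```

Because the graph is rebuilt into text after every update, the prompt passed to the model always reflects the current state of the scene rather than the initial observation.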

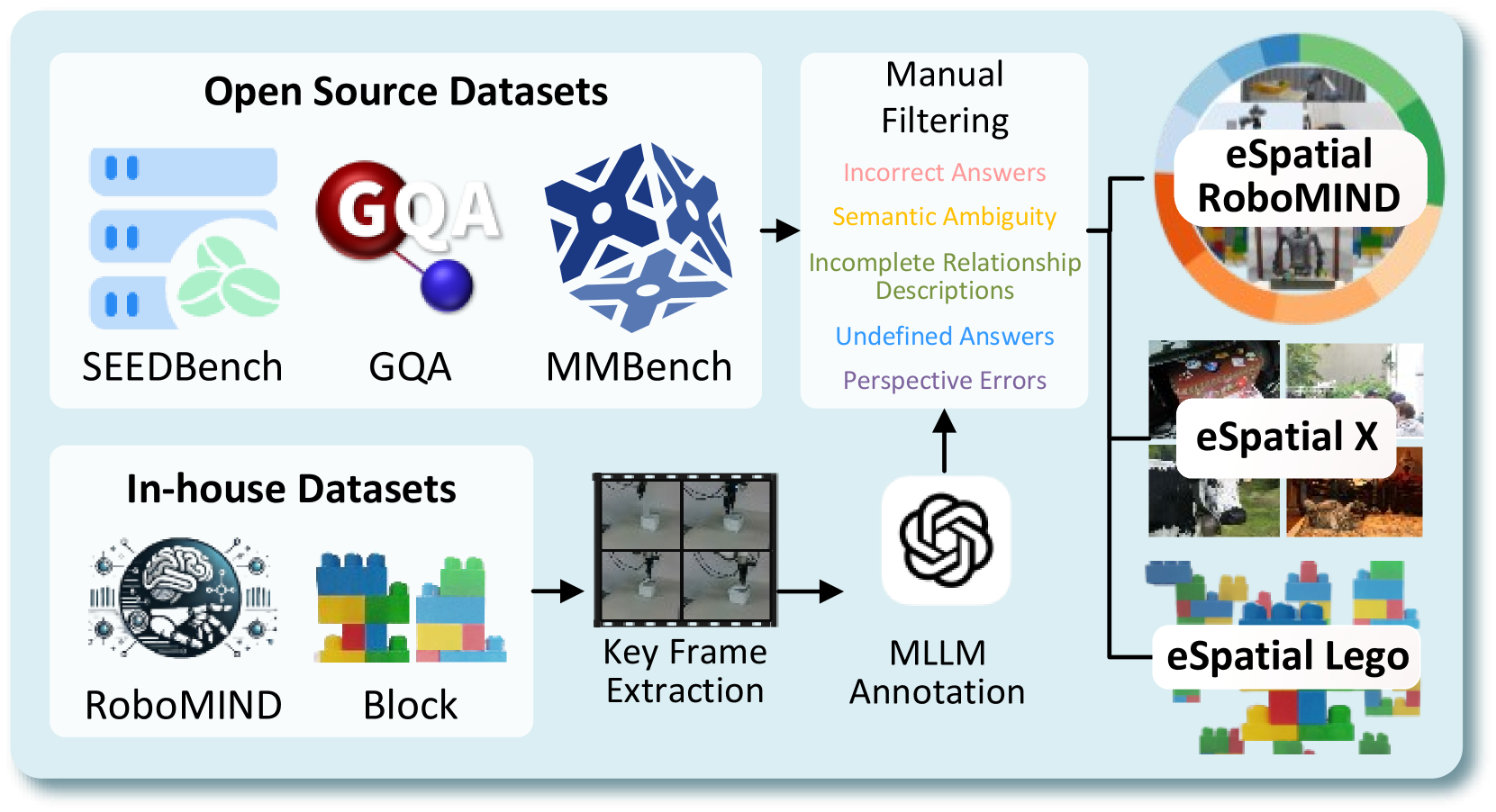

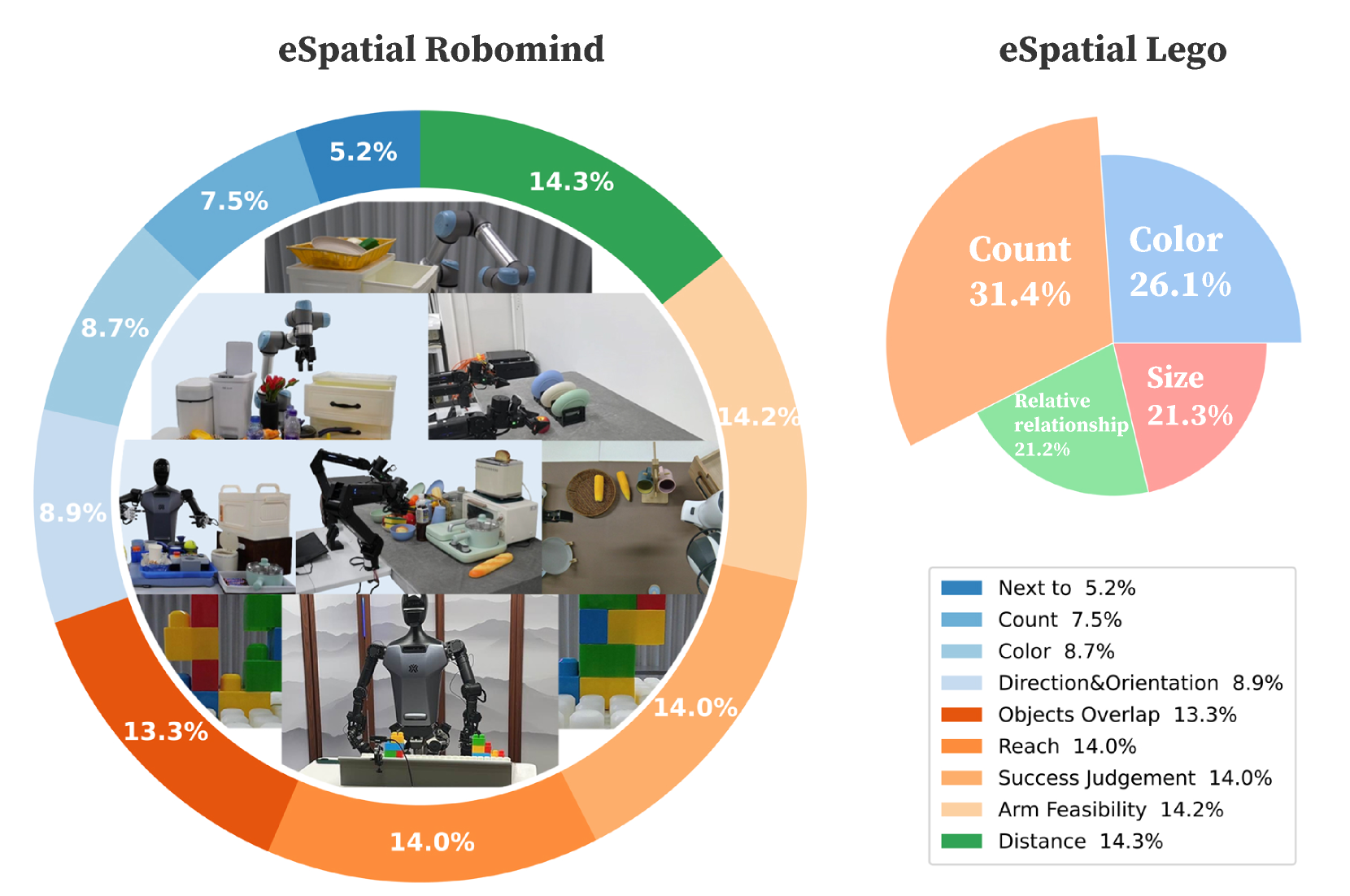

To rigorously evaluate performance, we introduce the eSpatial-Benchmark, a comprehensive dataset including real-world embodied scenarios with fine-grained spatial annotations and adaptive task difficulty levels. Experiments demonstrate that our framework significantly outperforms existing MLLM-based methods in accuracy and reasoning coherence, particularly in long-horizon tasks requiring iterative environment interaction. The results reveal the untapped potential of MLLMs for embodied intelligence when equipped with structured, explainable reasoning mechanisms, paving the way for more reliable deployment in real-world spatial applications.

EmbodiedVSR fuses dynamic scene graph generation, embodied spatial chain-of-thought reasoning, and robotic control, enabling robots to understand spatial relationships between objects. Additionally, we developed the eSpatial-Benchmark and dataset to further support the application of multimodal large models in embodied intelligence scenarios.

Many benchmark datasets have been published as MLLMs have advanced. Although models keep achieving higher scores on these benchmarks, our embodiment experiments showed unsatisfactory success rates when tasks require accurate visual understanding of the scene. This raises the concern of whether the Q&A pairs and evaluation metrics of these benchmarks are relevant to scene understanding for embodied intelligence, which requires such knowledge to guide planning and action. Consequently, we concluded that current MLLMs still struggle with the five significant visual challenges mentioned.

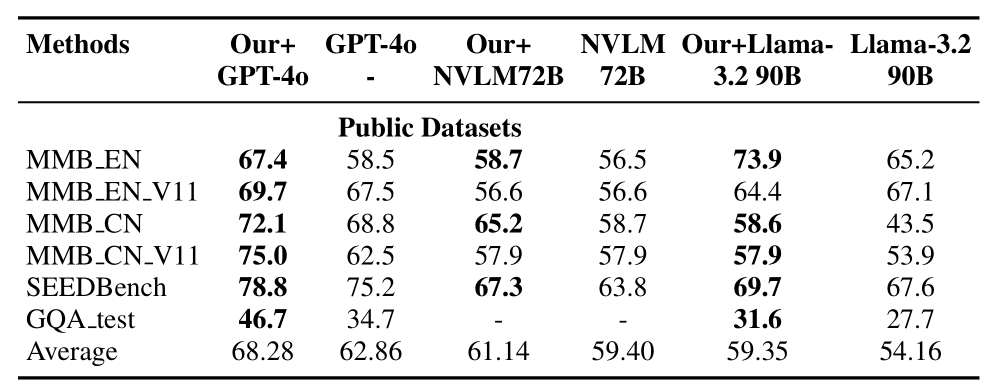

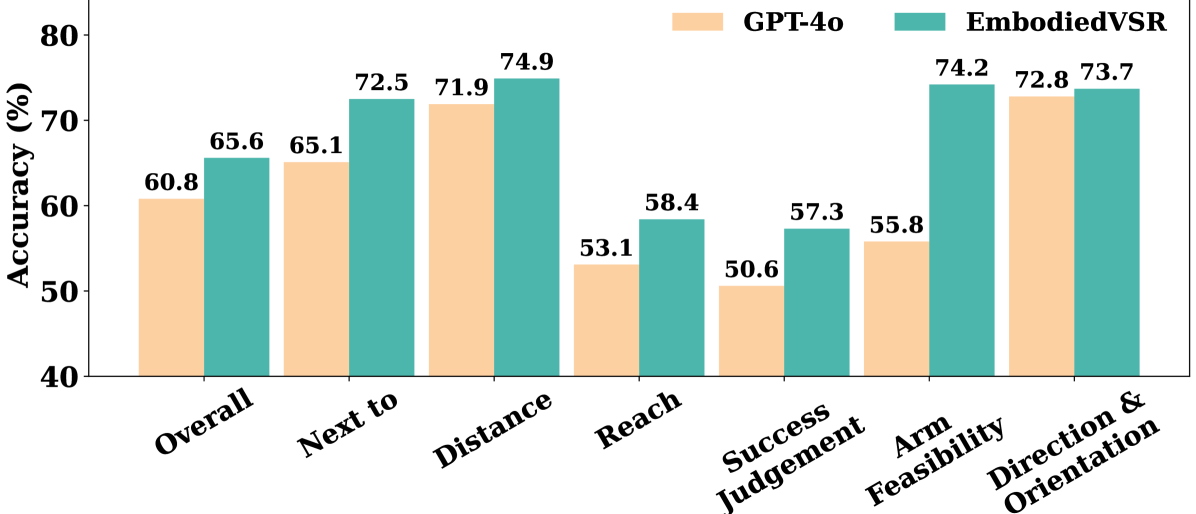

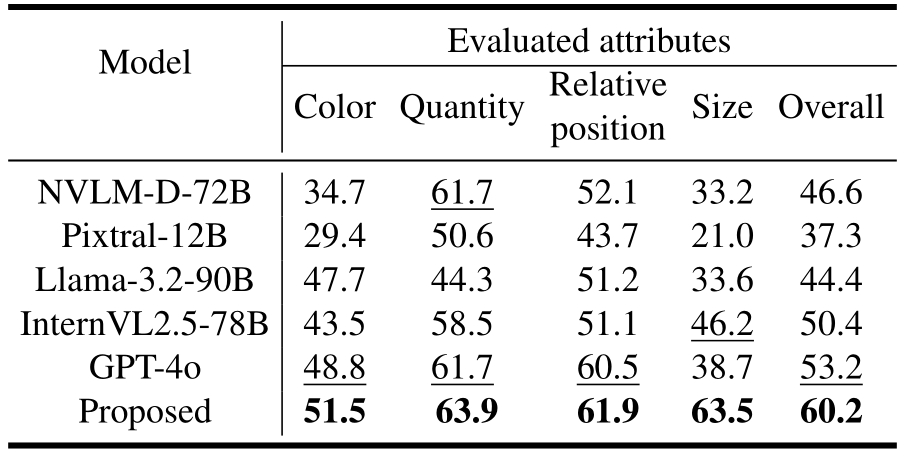

To validate our method, we evaluated EmbodiedVSR and baseline models (GPT-4o, NVLM-D-72B, and Llama-3.2 90B) on our eSpatial dataset, which includes eSpatial-X, eSpatial-RoboMIND, and eSpatial-Lego. To further validate the efficacy of our method in embodied scenarios, we established an automated LEGO assembly system on the Tien Kung humanoid robot. First, we employed EmbodiedVSR + GPT-4o to deconstruct the LEGO sample; the deconstruction results were then transmitted to the robot, which assembled an identical LEGO structure.
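The text does not specify how the deconstruction results are encoded. As a hedged sketch, assume they reduce to "rests on" relations between bricks; a feasible placement order then follows from a topological sort, so that every brick is placed only after the bricks supporting it. The `assembly_order` helper and the brick names are hypothetical; `graphlib.TopologicalSorter` is part of the Python standard library (3.9+).

```python
from graphlib import TopologicalSorter


def assembly_order(supports: dict[str, set[str]]) -> list[str]:
    """Return a placement order in which each brick follows the bricks it rests on.

    `supports` maps a brick to the set of bricks directly beneath it
    (an assumed encoding of the deconstruction result).
    """
    return list(TopologicalSorter(supports).static_order())


# Hypothetical structure: two bricks on a base plate, with a roof spanning both.
supports = {
    "base_plate": set(),
    "brick_a": {"base_plate"},
    "brick_b": {"base_plate"},
    "roof": {"brick_a", "brick_b"},
}
order = assembly_order(supports)
```

The relative order of `brick_a` and `brick_b` is not constrained by the support relations, so either may be placed first; a cycle in the relations raises `graphlib.CycleError`, which would indicate an inconsistent deconstruction.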